Data Analysis Sample Work

Mastering Video Coding: A Comprehensive Dive from Tools to Consumer Deployment

Info: 2565 words Sample Data Analysis

Published: 1st December 2023

Tagged: Computer Science & IT

Abstract

Applications based on multimedia information and video material grow in number every day. A key difficulty in developing new video coding standards is to increase the compression rate, and improve other crucial aspects, while keeping the rise in computational complexity acceptable. The Versatile Video Coding (VVC) standard was developed by the Joint Video Experts Team (JVET) of the ITU-T Video Coding Experts Group (VCEG) and the ISO/IEC Moving Picture Experts Group (MPEG). It was finally approved as international standard ISO/IEC 23090-3 (MPEG-I Part 3) in July 2020. This article introduces the standard's compression tools, consumer electronics use cases, the real-time implementations already available, and the first industrial trials conducted with the standard.

Introduction

The last two decades have witnessed exciting developments in consumer electronics applications. In this framework, multimedia applications, and more specifically those in charge of video encoding, broadcasting, storage and decoding, play a key role. According to a recent Cisco study [1], video content today represents around 82% of global Internet traffic, and video streaming alone represents 58% of Internet traffic [2]. These trends will further increase the share of video traffic, the storage requirements and, especially, the energy footprint of video. For instance, video streaming today contributes 1% of global greenhouse gas emissions, which is equivalent to the emissions of a country like Spain [3]. By 2025, the CO2 emissions induced by video streaming are expected to reach the global CO2 emissions of cars [3]. The massive consumption of multimedia content on consumer electronic products (mobile devices, smart TVs, video consoles, immersive and 360° video, or augmented and virtual reality devices) requires more efficient video coding algorithms that reduce bandwidth and storage requirements while increasing video quality. Mass-market products now demand videos with higher resolutions (beyond 4K), higher quality (HDR and 10-bit depth) and higher frame rates (100/120 frames per second). All of these features must be integrated into devices with limited resources and limited battery capacity.

Therefore, balancing the complexity of the algorithms against the efficiency of their implementations is a challenge in the development of new consumer electronic devices. In this context, the Joint Collaborative Team on Video Coding (JCT-VC), formed by ITU-T VCEG and ISO/IEC MPEG, started working in 2010 [4] on a more efficient video coding standard. One outcome of this collaboration was the High Efficiency Video Coding (HEVC) standard [5], which reduces the bit rate of its predecessor, Advanced Video Coding (AVC) [6], by about 50% at similar visual quality [7]. Versatile Video Coding (VVC) [8] is now the most recent video standard and therefore defines the current state of the art. The challenge for this new standard is to ensure that ubiquitous, embedded, resource-constrained systems can meet, in real time, the requirements imposed by increasingly complex and computationally demanding consumer electronics video applications.

The VVC standard has been published, and several research institutions and companies are currently working on efficient implementations that will soon be included in new consumer electronics devices. The following sections report several of these implementations, as well as recent trials in real-world scenarios.

Potential of VVC from two different points of view: improving existing services and enabling emerging ones

- Use-cases and application standards integration

- VVC coding tools

- Complexity and coding performance

- Real-time implementations

- ♦ Real-Time VVC Decoding with OpenVVC

- ♦ Real-Time VVC Decoding with VVdeC

- ♦ Real-Time VVC Encoding with VVenC

- ♦ Real-Time VVC Encoding and Packaging with TitanLive

- First commercial trials

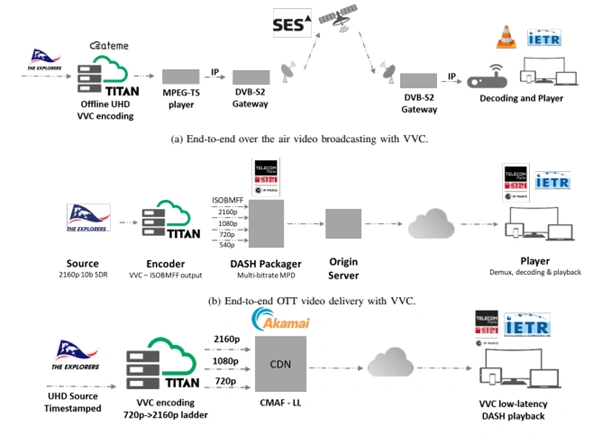

- ♦ Overview: The trial depicted in Figure 5a took place in June 2020 and is the result of a collaboration between the following entities:

- ♦ ATEME provided the encoding and packaging units.

- ♦ SES provided the satellite transponder used for the experiment as well as the gateways needed at the transmission and reception sides.

- ♦ VideoLabs provided the media player (VLC), including demuxing and playback.

- ♦ IETR provided the VVC real-time decoding library used by the VLC player.

- ♦ Overview: The trial depicted in Figure 5b took place in June 2020 and is the result of a collaboration between the following entities:

- ♦ ATEME provided the encoding unit.

- ♦ Telecom Paris provided the DASH packager (MP4Box) and the player (MP4Client) from GPAC.

- ♦ IETR provided the VVC real-time decoding library used by MP4Client.

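- ♦ Overview: The trial depicted in Figure 5c took place in September 2020 and is the result of a collaboration between the following entities:

- ♦ ATEME provided the encoding unit.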

- ♦ Telecom Paris provided the DASH packager (MP4Box) and the player (MP4Client) from GPAC.

- ♦ IETR provided the VVC real-time decoding library used by MP4Client.

- ♦ Akamai provided content delivery network (CDN) infrastructure supporting HTTP chunk transfer encoding to enable low latency.


- Cisco, "Cisco annual internet report (2018–2023) white paper," 2020.

- Arulrajah and E. Marketing, "The world is in a state of flux. Is your website traffic, too?" March 2021.

- Efoui-Hess, "Climate crisis: The unsustainable use of online video. The practical case for digital sobriety," July 2019.

- "JCT-VC - Joint Collaborative Team on Video Coding," https://www.itu.int/en/ITU-T/studygroupsaspx, 2010.

- G. J. Sullivan, J. M. Boyce, Y. Chen, J. Ohm, C. A. Segall, and A. Vetro, "Standardized extensions of high efficiency video coding (HEVC)," IEEE Journal of Selected Topics in Signal Processing, vol. 7, no. 6, pp. 1001–1016, December 2013.

- G. J. Sullivan and T. Wiegand, "Video compression - from concepts to the H.264/AVC standard," Proceedings of the IEEE, vol. 93, no. 1, pp. 18–31, January 2005.

- K. Tan, R. Weerakkody, M. Mrak, N. Ramzan, V. Baroncini, J.-R. Ohm, and G. J. Sullivan, "Video quality evaluation methodology and verification testing of HEVC compression performance," IEEE Transactions on Circuits and Systems for Video Technology, vol. 26, no. 1, pp. 76–90, 2016.

- Bross, J. Chen, J.-R. Ohm, G. J. Sullivan, and Y.-K. Wang, "Developments in international video coding standardization after AVC, with an overview of versatile video coding (VVC)," Proceedings of the IEEE, vol. 109, no. 9, pp. 1463–1493, 2021.

- Bross, Y.-K. Wang, Y. Ye, S. Liu, J. Chen, G. J. Sullivan, and J.-R. Ohm, "Overview of the versatile video coding (VVC) standard and its applications," IEEE Transactions on Circuits and Systems for Video Technology, vol. 31, no. 10, pp. 3736–3764, 2021.

- Sidaty, W. Hamidouche, O. Deforges, P. Philippe, and J. Fournier, "Compression Performance of the Versatile Video Coding: HD and UHD Visual Quality Monitoring," in 2019 Picture Coding Symposium (PCS), 2019, pp. 1–5.

- -W. Huang, J. An, H. Huang, X. Li, S. Hsiang, K. Zhang, H. Gao, J. Ma, and O. Chubach, "Block partitioning structure in the VVC standard," IEEE Transactions on Circuits and Systems for Video Technology, pp. 3818–3833, 2021.

- Pfaff, A. Filippov, S. Liu, X. Zhao, J. Chen, S. De-Luxan-Hernández, T. Wiegand, V. Rufitskiy, A. Ramasubramonian, and G. Van der Auwera, "Intra prediction and mode coding in VVC," IEEE Transactions on Circuits and Systems for Video Technology, pp. 3834–3847, 2021.

- Zhao, S.-H. Kim, Y. Zhao, H. E. Egilmez, M. Koo, S. Liu, J. Lainema, and M. Karczewicz, "Transform coding in the VVC standard," IEEE Transactions on Circuits and Systems for Video Technology, pp. 3834–3847, 2021.

- Karczewicz, N. Hu, J. Taquet, C.-Y. Chen, K. Misra, K. Andersson, P. Yin, T. Lu, E. François, and J. Chen, "VVC in-loop filters," IEEE Transactions on Circuits and Systems for Video Technology, pp. 3907–3925, 2021.

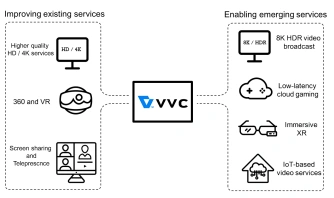

The need for more efficient codecs has arisen in different sectors of the video delivery ecosystem, since the choice of codec will play a critical role in their success in the coming years. This covers different applications over different transport media, and VVC is consistently considered one of the main options. The VVC standard covers a significantly wider range of applications than previous video codecs, which is likely to reduce deployment costs and ease the interoperability issues of VVC-based solutions. Thanks to its versatility and high capacity to address upcoming compression challenges, VVC can be used both to improve existing video communication applications and to enable new ones relying on emerging technologies, as illustrated in Figure 1.

To properly address market needs and be deployed at scale, VVC must be referenced and adopted in the specifications of application-oriented standards developing organizations (SDOs). Organizations such as Digital Video Broadcasting (DVB), the 3rd Generation Partnership Project (3GPP) or the Advanced Television Systems Committee (ATSC) define receiver capabilities for broadcast and broadband applications and are thus critical to fostering VVC adoption in the ecosystem. Apart from its intrinsic performance (complexity and compression), the successful adoption of a new video codec also depends on its licensing structure.

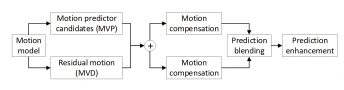

In this section, we briefly describe the VVC coding tools to highlight the improvements over its predecessors. Figure 2 illustrates the block diagram of a VVC encoder, which follows the conventional hybrid prediction/transform coding scheme. The VVC encoder is composed of seven main blocks: 1) luma forward mapping, 2) picture partitioning, 3) prediction, 4) transform/quantization, 5) inverse transform/quantization, 6) in-loop filters, and 7) entropy coding. These blocks are briefly described in this section. The luma forward mapping step is normative and relies on the luma mapping with chroma scaling (LMCS) tool, which is described in the in-loop filters subsection. For a more exhaustive description of the VVC high-level syntax [15] or of the VVC coding tools and profiles, the reader may refer to the overview paper [9].
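The defining property of the hybrid scheme is that the encoder contains a copy of the decoder (blocks 5 and 6), so that predictions are formed from reconstructed samples rather than original ones and encoder and decoder never drift apart. The toy 1-D loop below is a minimal sketch of that closed loop only, assuming a previous-sample predictor and a plain uniform quantizer; the transform, partitioning, LMCS, in-loop filtering and entropy coding are deliberately left out, and every name in it is hypothetical rather than taken from VTM or the specification.

```python
# Toy closed-loop hybrid coder on a 1-D signal (illustrative sketch, not VVC).
# All names are hypothetical; transform, partitioning, LMCS, in-loop
# filtering and entropy coding are deliberately omitted.

INITIAL_PREDICTOR = 128  # assumed predictor shared by encoder and decoder


def quantize(residual: int, step: int) -> int:
    """Uniform scalar quantization, rounding half away from zero."""
    level = (abs(residual) + step // 2) // step
    return level if residual >= 0 else -level


def dequantize(level: int, step: int) -> int:
    return level * step


def encode(samples: list[int], step: int) -> tuple[list[int], list[int]]:
    levels, recon = [], []
    prev = INITIAL_PREDICTOR
    for s in samples:
        pred = prev                           # prediction from *reconstructed* data
        level = quantize(s - pred, step)      # forward quantization of the residual
        levels.append(level)                  # symbols an entropy coder would send
        rec = pred + dequantize(level, step)  # encoder-side decoding loop
        recon.append(rec)
        prev = rec
    return levels, recon


def decode(levels: list[int], step: int) -> list[int]:
    recon = []
    prev = INITIAL_PREDICTOR
    for level in levels:
        rec = prev + dequantize(level, step)  # same reconstruction as the encoder
        recon.append(rec)
        prev = rec
    return recon


samples = [130, 135, 140, 150, 149]
levels, enc_recon = encode(samples, step=4)
print(levels)                               # [1, 1, 1, 3, -1]
print(decode(levels, step=4) == enc_recon)  # True
```

Because the predictor uses reconstructed values, the reconstruction error of every sample stays within half a quantization step instead of accumulating along the signal.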

A. Picture partitioning

The first step of the picture partitioning block splits the picture into blocks of equal size, called coding tree units (CTUs). In VVC, the maximum CTU size is 128 × 128 samples, and a CTU is composed of one or three coding tree blocks (CTBs), depending on whether the video signal is monochrome or contains three colour components.
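As a quick illustration of this first partitioning step, the sketch below counts the CTUs covering a picture and lists their areas in raster-scan order, assuming the 128 × 128 maximum size stated above. The helper names are hypothetical. CTUs on the right and bottom edges are simply clipped to the picture area here; the standard handles such boundary CTUs with implicit splits, which this sketch does not model.

```python
MAX_CTU_SIZE = 128  # maximum VVC CTU size in luma samples (stated in the text)


def ctu_grid(width: int, height: int, ctu_size: int = MAX_CTU_SIZE) -> tuple[int, int]:
    """Number of CTU columns and rows needed to cover the picture (ceiling division)."""
    return -(-width // ctu_size), -(-height // ctu_size)


def ctu_rects(width: int, height: int, ctu_size: int = MAX_CTU_SIZE):
    """Yield (x, y, w, h) for each CTU in raster-scan order.

    CTUs on the right and bottom edges are clipped to the picture area."""
    for y in range(0, height, ctu_size):
        for x in range(0, width, ctu_size):
            yield x, y, min(ctu_size, width - x), min(ctu_size, height - y)


def ctbs_per_ctu(chroma_format: str) -> int:
    """One CTB (luma only) for monochrome video, three CTBs otherwise."""
    return 1 if chroma_format == "4:0:0" else 3


cols, rows = ctu_grid(1920, 1080)
print(cols, rows, cols * rows)          # 15 9 135
print(list(ctu_rects(1920, 1080))[-1])  # (1792, 1024, 128, 56)
```

For a 1080p picture, 1080 is not a multiple of 128, so the bottom row of CTUs covers only 56 lines of actual picture content.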

B. Intra-coding tools

Intra coding takes advantage of the spatial correlation that exists in local image texture. To decorrelate this texture, VVC provides a series of coding tools that are tied to the partitioning and to a set of intra-prediction modes (IPMs).

C. Inter-coding tools

Inter coding relies on the prediction of motion and texture data from previously reconstructed pictures stored in the decoded picture buffer (DPB).
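To make the idea of predicting from a reconstructed picture concrete, the sketch below shows classic integer-sample block matching: an exhaustive search over a small window that minimizes the sum of absolute differences (SAD), followed by fetching the prediction block at the chosen motion vector. This is a generic textbook technique, not the VTM motion search; real VVC encoders use fast search strategies, fractional-sample interpolation and many additional inter tools that are omitted here. The pictures and all function names are hypothetical.

```python
def sad(cur, ref, bx, by, dx, dy, size):
    """Sum of absolute differences between the current block and a displaced reference block."""
    return sum(
        abs(cur[by + j][bx + i] - ref[by + dy + j][bx + dx + i])
        for j in range(size)
        for i in range(size)
    )


def full_search(cur, ref, bx, by, size, search_range):
    """Exhaustive integer-sample motion search; returns (best_sad, dx, dy)."""
    height, width = len(ref), len(ref[0])
    best = None
    for dy in range(-search_range, search_range + 1):
        for dx in range(-search_range, search_range + 1):
            x0, y0 = bx + dx, by + dy
            # Skip candidates whose block would fall outside the reference picture
            if x0 < 0 or y0 < 0 or x0 + size > width or y0 + size > height:
                continue
            cost = sad(cur, ref, bx, by, dx, dy, size)
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    return best


def motion_compensate(ref, bx, by, dx, dy, size):
    """Fetch the prediction block from the reference picture at the motion vector."""
    return [row[bx + dx: bx + dx + size] for row in ref[by + dy: by + dy + size]]


# Hypothetical 16x16 reconstructed reference picture (standing in for a DPB entry)
# and a current picture whose content is displaced by (dx, dy) = (2, 1).
ref = [[(7 * x + 13 * y) % 256 for x in range(16)] for y in range(16)]
cur = [[ref[min(y + 1, 15)][min(x + 2, 15)] for x in range(16)] for y in range(16)]

print(full_search(cur, ref, bx=4, by=4, size=4, search_range=3))  # (0, 2, 1)
```

Only the motion vector and the residual between the current block and the motion-compensated block need to be coded; the decoder repeats `motion_compensate` on its own copy of the reference picture.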

Block diagram of the VVC inter-prediction process

Among the intra-coding tools, the intra sub-partitions (ISP) tool splits an intra block into two or four sub-blocks, each with its own residual block, while all sub-blocks share a single intra mode. The initial motivation behind this tool is to allow short-distance intra prediction of blocks with non-stationary texture by processing the sub-partitions sequentially, so that each sub-partition is predicted from the reconstructed samples of the previous one. On thin, non-square blocks, this scheme can produce sub-partitions coded with 1-D residual blocks, which provides the closest possible reference lines for intra prediction.
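The sub-partition geometry can be sketched as follows. The size rule is an assumption summarized from the VVC design rather than something stated in this text: ISP is not used for 4 × 4 blocks, 4 × 8 and 8 × 4 blocks are split into two sub-partitions, and all other eligible sizes into four, horizontally or vertically. The function name and the `"hor"`/`"ver"` labels are hypothetical.

```python
def isp_subpartitions(width: int, height: int, split: str) -> list[tuple[int, int, int, int]]:
    """Return (x, y, w, h) of each ISP sub-partition, in processing order.

    Assumed VVC rule: ISP is not used for 4x4 blocks, 4x8 and 8x4 blocks are
    split into 2 sub-partitions, and all other eligible sizes into 4.
    split is "hor" (sub-partitions stacked top to bottom) or "ver" (left to right).
    """
    if (width, height) == (4, 4):
        raise ValueError("ISP is not applied to 4x4 blocks")
    n = 2 if (width, height) in ((4, 8), (8, 4)) else 4
    if split == "hor":
        h = height // n
        return [(0, k * h, width, h) for k in range(n)]
    if split == "ver":
        w = width // n
        return [(k * w, 0, w, height) for k in range(n)]
    raise ValueError("split must be 'hor' or 'ver'")


print(isp_subpartitions(8, 4, "hor"))   # [(0, 0, 8, 2), (0, 2, 8, 2)]
print(isp_subpartitions(4, 16, "ver"))  # four 1x16 sub-partitions (1-D residuals)
```

The second call shows the thin-block case described above: a vertical split of a 4 × 16 block yields one-sample-wide sub-partitions, each predicted from the reconstruction of its left neighbour once that neighbour has been decoded.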

Matrix-based intra prediction (MIP) is another new intra tool in VVC, designed with a data-driven, machine-learning-based method. MIP modes replace the functionality of conventional IPMs by a matrix multiplication of the reference lines instead of their directional projection. Predicting a block with MIP consists of three main steps: 1) averaging with sub-sampling, 2) matrix-vector multiplication, and 3) linear interpolation. The data-driven aspect of MIP lies in the second step, where a set of pre-trained matrices is hard-coded in the VVC specification. This set provides distinct matrices for different combinations of block size and internal MIP mode.
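The three MIP steps can be sketched in floating point as below, loosely mirroring the 8 × 8 case (top and left boundaries each reduced to 4 samples, a 16 × 8 matrix producing a 4 × 4 reduced prediction, then upsampling to 8 × 8). This is a simplified illustration under stated assumptions: the matrix is a hypothetical placeholder that simply averages the reduced boundary, not one of the trained matrices from the specification, and the real process uses integer arithmetic and boundary-aware interpolation that this sketch does not reproduce.

```python
def average_boundary(samples: list[float], out_len: int) -> list[float]:
    """Step 1: reduce a boundary line by averaging groups of neighbouring samples."""
    factor = len(samples) // out_len
    return [sum(samples[k * factor:(k + 1) * factor]) / factor for k in range(out_len)]


def mat_vec(matrix: list[list[float]], vec: list[float]) -> list[float]:
    """Step 2: matrix-vector multiplication producing the reduced prediction."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def linear_resize(values: list[float], out_len: int) -> list[float]:
    """Step 3 helper: 1-D linear interpolation from len(values) to out_len samples."""
    n = len(values)
    out = []
    for i in range(out_len):
        pos = min(max((i + 0.5) * n / out_len - 0.5, 0.0), n - 1)
        i0 = int(pos)
        i1 = min(i0 + 1, n - 1)
        frac = pos - i0
        out.append(values[i0] * (1 - frac) + values[i1] * frac)
    return out


def mip_predict(top, left, matrix, red_size, block_size):
    """Simplified MIP for a square block: average, multiply, interpolate."""
    bdry = average_boundary(top, red_size) + average_boundary(left, red_size)
    red = mat_vec(matrix, bdry)  # red_size * red_size values, raster order
    rows = [red[r * red_size:(r + 1) * red_size] for r in range(red_size)]
    rows = [linear_resize(row, block_size) for row in rows]  # horizontal pass
    cols = [linear_resize([row[c] for row in rows], block_size)  # vertical pass
            for c in range(block_size)]
    return [[cols[c][r] for c in range(block_size)] for r in range(block_size)]


# Hypothetical 16x8 placeholder matrix: every output sample is the mean of the
# 8 reduced boundary samples (a DC-like mode). Real MIP matrices are trained.
PLACEHOLDER_MATRIX = [[1 / 8] * 8 for _ in range(16)]

top = [100, 102, 104, 106, 108, 110, 112, 114]
left = [100, 98, 96, 94, 92, 90, 88, 86]
pred = mip_predict(top, left, PLACEHOLDER_MATRIX, red_size=4, block_size=8)
print(len(pred), len(pred[0]))  # 8 8
```

The design point the sketch captures is that only the small matrix in step 2 carries the learned behaviour; averaging and interpolation keep the matrix size independent of the block size.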

D. In-loop filters

The picture partitioning and quantization steps used in VVC may cause coding artifacts such as block discontinuities, ringing artifacts, mosquito noise, or texture and edge smoothing. VVC therefore defines four in-loop filters to alleviate these artifacts and improve overall coding efficiency [19]: the deblocking filter (DBF), sample adaptive offset (SAO), the adaptive loop filter (ALF) and the cross-component adaptive loop filter (CC-ALF). In addition, LMCS is a new tool introduced in VVC that applies luma mapping to the luma prediction signal in inter mode and chroma scaling to the chroma residuals after inverse transform and inverse quantization. The DBF is applied on block boundaries to reduce blocking artifacts, and the SAO filter is then applied to the deblocked samples. SAO first classifies the reconstructed samples into different categories; then, for each category, an offset value decoded by the entropy decoder is added to every sample of that category.
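SAO supports two classification types, edge offset and band offset. The sketch below illustrates the classify-then-add-offset process with band offset only, assuming the HEVC/VVC design in which the sample range is split into 32 equal bands and four signalled offsets apply to four consecutive bands starting at a signalled band position. The function name and the sample values are hypothetical; edge-offset classification, which compares each sample with its neighbours, is not shown.

```python
def sao_band_offset(samples: list[int], bit_depth: int, band_position: int,
                    offsets: list[int]) -> list[int]:
    """Apply SAO band offset to a list of deblocked samples.

    Classification: the sample range is divided into 32 equal bands, and the
    band index of a sample is sample >> (bit_depth - 5). The signalled offsets
    apply to consecutive bands starting at band_position (wrapping around
    modulo 32); samples in other bands are left unchanged.
    """
    shift = bit_depth - 5
    max_val = (1 << bit_depth) - 1
    out = []
    for s in samples:
        k = ((s >> shift) - band_position) & 31  # position relative to the first band
        if k < len(offsets):
            s = min(max(s + offsets[k], 0), max_val)  # add offset, clip to valid range
        out.append(s)
    return out


deblocked = [95, 96, 104, 127, 128, 255]
print(sao_band_offset(deblocked, bit_depth=8, band_position=12, offsets=[2, -1, 3, 0]))
# [95, 98, 103, 127, 128, 255]
```

With 8-bit video each band spans 8 values, so band position 12 selects samples 96 to 127; the samples 95 and 128 fall just outside and are left untouched.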

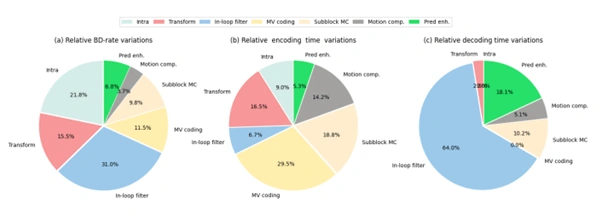

In order to assess the benefits of the VVC coding tools described in the previous sections, a "tool-off" test has been performed. For each tool, it evaluates the coding cost variation between an encoding with all tools enabled and an encoding with all tools enabled except the tested tool. A set of 42 UHD sequences, not included in the common test sequences used during the VVC development process, has been used. This set includes sequences of various texture complexity, motion amplitude and local variations, and frame rates (from 30 to 60 frames per second). The evaluation has been performed in the random access (RA) configuration, with a group of pictures (GOP) size of 32 and one intra frame inserted every second, using the VVC reference software (VTM11.0). The evaluation focuses on the main new tools supported by VVC and absent from HEVC; the partitioning and entropy coding parts are not considered in this evaluation.
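The text does not name the metric behind the "coding cost variation". JVET results are conventionally reported as a Bjøntegaard delta rate (BD-rate), so the sketch below assumes that metric: the classic method fits a cubic of log bitrate against PSNR for each of the two encodings (with four rate points, the cubic passes exactly through them), integrates both over the overlapping PSNR range, and converts the average log-rate gap into a percentage. A positive value means the tool-off encoding needs more bits, which measures the benefit of the disabled tool. The RD points are hypothetical, and official JVET reporting templates may use a different interpolation.

```python
import math


def _solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def _cubic(xs, ys):
    """Coefficients c0..c3 of the cubic through four (x, y) points."""
    return _solve([[x ** k for k in range(4)] for x in xs], list(ys))


def _integrate(coeffs, lo, hi):
    return sum(c * (hi ** (k + 1) - lo ** (k + 1)) / (k + 1) for k, c in enumerate(coeffs))


def bd_rate(anchor_rate, anchor_psnr, test_rate, test_psnr):
    """Average bitrate difference (%) of test vs. anchor over the common PSNR range."""
    lo = max(min(anchor_psnr), min(test_psnr))
    hi = min(max(anchor_psnr), max(test_psnr))
    mid = (lo + hi) / 2  # centre PSNR values to keep the fit well conditioned
    pa = _cubic([p - mid for p in anchor_psnr], [math.log(r) for r in anchor_rate])
    pt = _cubic([p - mid for p in test_psnr], [math.log(r) for r in test_rate])
    diff = _integrate(pt, lo - mid, hi - mid) - _integrate(pa, lo - mid, hi - mid)
    return (math.exp(diff / (hi - lo)) - 1) * 100


# Hypothetical 4-point RD curves (kbit/s, dB): the "tool off" run needs 5% more bits.
anchor_rate = [1000, 1800, 3300, 6000]
anchor_psnr = [34.0, 36.5, 39.0, 41.2]
tool_off_rate = [r * 1.05 for r in anchor_rate]
print(round(bd_rate(anchor_rate, anchor_psnr, tool_off_rate, anchor_psnr), 3))  # 5.0
```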

As of now, VVC benefits from both industrial and open-source implementations, contributing to the emergence of an end-to-end value chain. For example, manufacturers, universities and research institutes have announced the availability of fast VVC implementations [25]. Among existing solutions, the INSA Rennes real-time OpenVVC decoder, the Fraunhofer Heinrich Hertz Institute VVdeC decoder [26], the VVenC encoder [27] and the ATEME TitanLive encoding platform have been developed during the last months.

Relative contributions of the main VVC tools' categories in RA coding configuration

Using the previously described tools, several world-first VVC field trials [34], [35] have been carried out, as described in the following subsections.

A. World-First OTA Broadcasting with VVC

B. World-First OTT Delivery with VVC

C. World-First 4K Live OTT Channel with VVC

The trial depicted in Figure 5c took place in September 2020 and is the result of a collaboration between the following entities:

Conclusion

In this paper, we have addressed several important aspects of the latest video coding standard, VVC, from market use cases, coding tool descriptions, and per-tool coding efficiency and complexity assessments to the description of real-time VVC codec implementations used in early commercial trials. Real-time implementations of VVC codecs, as well as the adoption of VVC by application standards, are essential to ensure wide adoption and successful deployment of the standard. The current status of the real-time VVC codecs developed so far and the demonstrated end-to-end VVC transmissions over broadcast and OTT communication media clearly show that VVC technology is mature and ready for real deployment in consumer electronic products. We predict that VVC will be integrated into most consumer electronics devices in the near future.

References
